Ring Attention with Blockwise Transformers for Near-Infinite Context

For session 4 of the EleutherAI ML Scalability & Performance reading group, I gave a presentation covering the seminal paper “Ring Attention with Blockwise Transformers for Near-Infinite Context.”

I also cover two key pieces of prior work that provide the foundation for ring attention, looking at the limitations of those earlier approaches and how ring attention built on them to unlock massive gains in maximum sequence length for transformer models.

Papers:

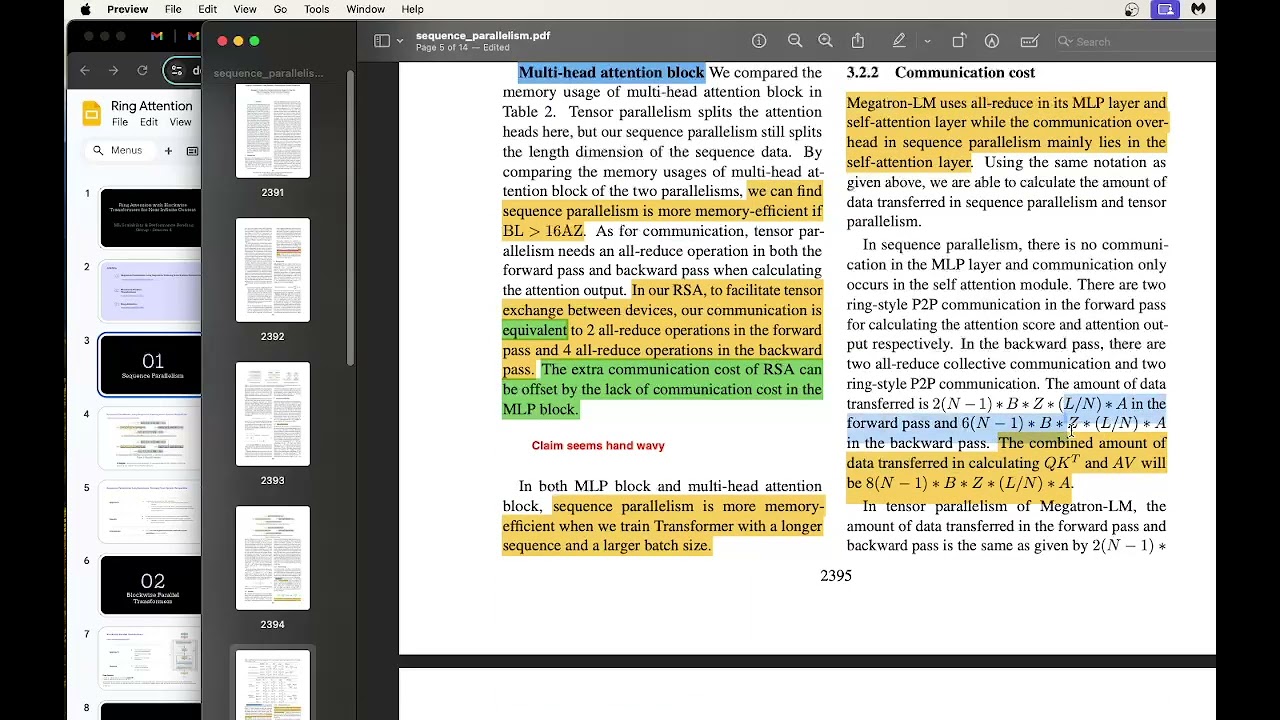

- Sequence Parallelism: Long Sequence Training from System Perspective

- Blockwise Parallel Transformer for Large Context Models

- Ring Attention with Blockwise Transformers for Near-Infinite Context

Recording: